IntelligentCarpet: Inferring 3D Human Pose from Tactile Signals

Yiyue Luo

Yunzhu Li

Michael Foshey

Wan Shou

Pratyusha Sharma

Abstract

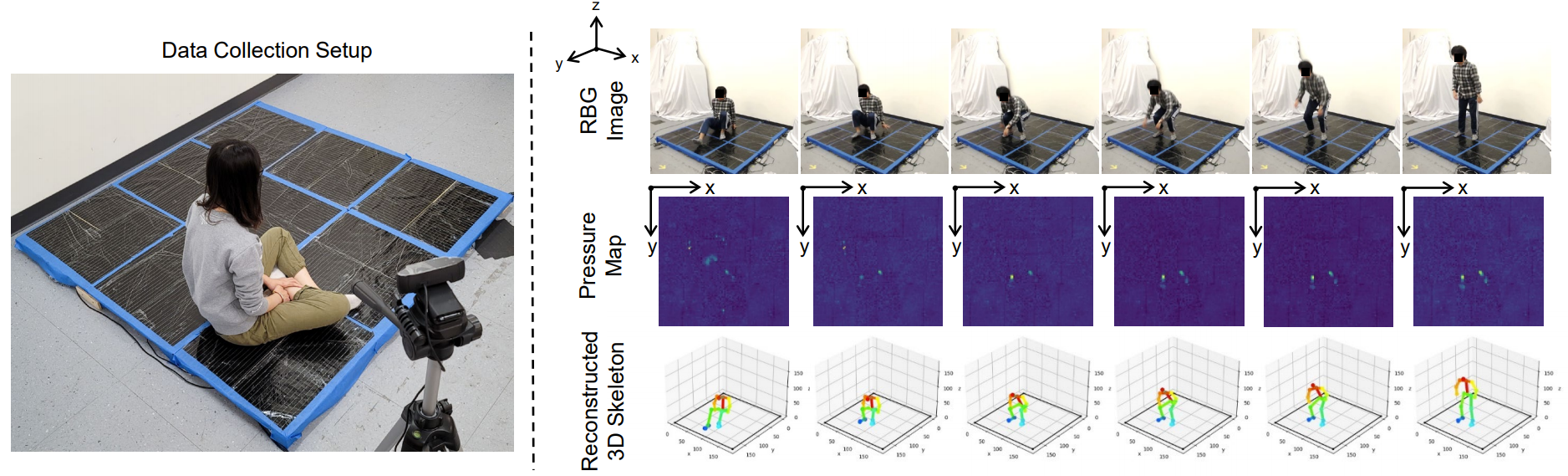

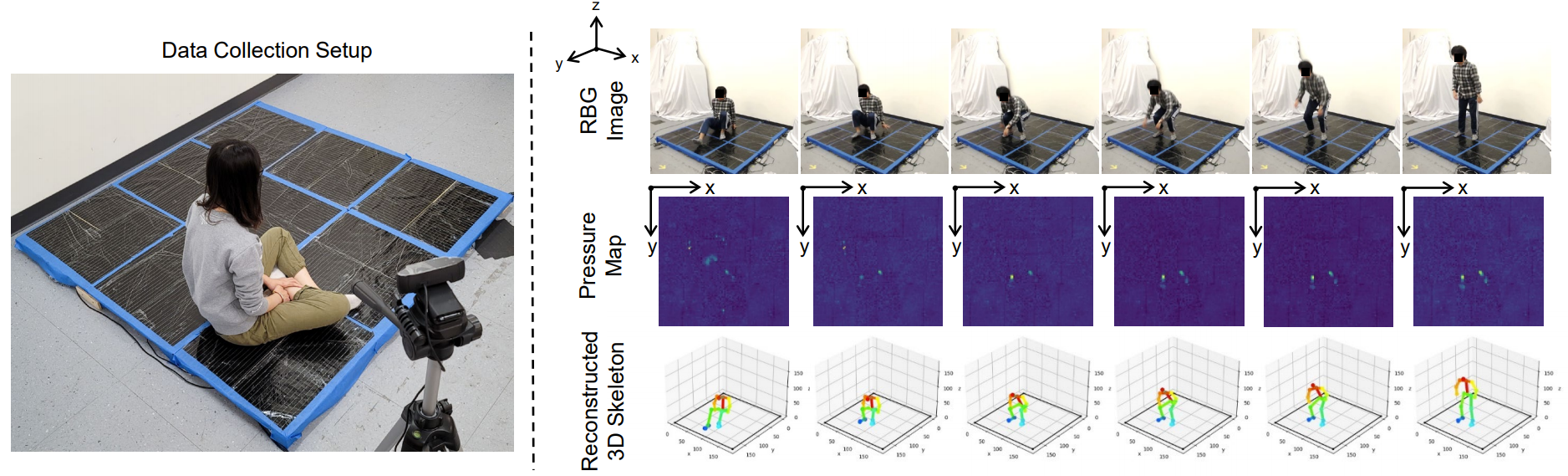

Daily human activities, e.g., locomotion, exercise, and resting, are heavily guided by the tactile interactions between humans and the ground. In this work, leveraging such tactile interactions, we propose a 3D human pose estimation approach that takes as input the pressure maps recorded by a tactile carpet. We build a low-cost, high-density, large-scale intelligent carpet, which enables seamless, real-time recording of human-floor tactile interactions. We collect a synchronized tactile and visual dataset of various human activities. Employing a state-of-the-art camera-based pose estimation model as supervision, we design and implement a deep neural network model that infers 3D human poses using only the tactile information. Our pipeline can be further scaled up to multi-person pose estimation. We evaluate our system and demonstrate its potential applications in diverse fields.

Paper

IntelligentCarpet: Inferring 3D Human Pose from Tactile Signals

Y. Luo, Y. Li, M. Foshey, W. Shou, P. Sharma, T. Palacios, A. Torralba, W. Matusik

CVPR 2021

[Paper]

[Dataset]

[Code]

Related Projects

Learning Human-environment Interactions using Conformal Tactile Textiles

Y. Luo, Y. Li, P. Sharma, W. Shou, K. Wu, M. Foshey, T. Palacios, A. Torralba, W. Matusik

Nature Electronics

[Project page]

Learning the Signatures of the Human Grasp Using a Scalable Tactile Glove

S. Sundaram, P. Kellnhofer, Y. Li, J. Zhu, A. Torralba, W. Matusik

Nature, 569 (7758), 2019

[Project page]